An old GPU put to use for new LLMs

Where are all the Graphics Processing Units (GPUs)? There’s probably one in your computer or phone. Its size depends on the amount of graphics processing you want to do with it. Scrolling text media - small, integrated graphics processor. Generating silly poems about dogs with jobs from ChatGPT - a building filled with servers, IT professionals, and maybe close to a large body of water for cooling. Wait that doesn’t sound like graphics processing? It’s not fate that the mathematics used for rendering 3D graphics like Fortnite or Disney movies is also used for machine learning.

Linear algebra is an incredibly expressive framework for representing complex systems. The boon of cryptocurrency and crypto mining, and now deep learning, is continuing to raise prices for GPUs wanted by gamers - who want them for graphics - is funny to me. Computer graphics are a bunch of translation and rotation operations of points in 3D space that are projected onto your 2D screen. These operations are linear algebra-heavy. Nvidia, the world leader in GPU design, knows this and has made it as easy as possible to write software for GPUs so they can do linear algebra in these lucrative ways.

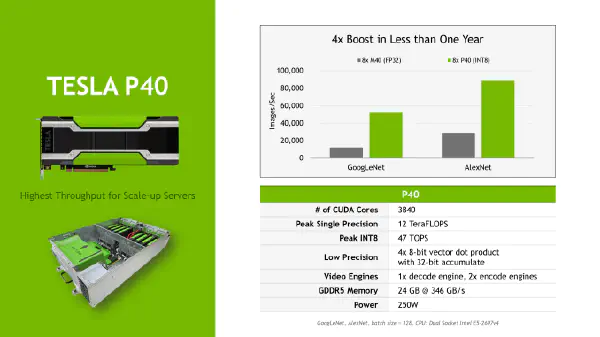

The paper Attention is All You Need was a turning point in Deep Learning which allows for parallelization of training and inference for sequential data like text. The Attention architecture is what makes Large Language Models like ChatGPT possible. This paper was released in 2017, a year after the announcement of the Nvidia Tesla P40.

Large Language Models Are Large

The weights of a model are the numbers that are combined with input data over many steps to produce an output. Training is finding the weights that produce the desired output. GPT-4 has trillions of parameters of a secret size and architecture. That means the model is many GBs large. Not only is storing the model on your hard drive tough but it must also be moved to your RAM when you want to use it to compute. I won’t pretend to have the hardware that OpenAI does, so let’s consider a model designed for a single consumer: Deepseek Coder. This model has been trained for coding tasks and was chosen from the EvalPlus Leaderboard for its performance and size. This model has 6.7 billion parameters, which takes about 14 GB of VRAM. This size is the limiting factor for inferencing on a GPU. I built my current PC in 2020 using part of my COVID bucks with robot simulation, machine learning, and gaming requirements. I settled on a Nvidia GeForce 1660 Super for around $230. It turns out the prices haven’t changed much. With a measly 6GB of VRAM, this GPU won’t do.

So, I scan Wikipedia’s table of Nvidia GPUs. First, sort by Memory Size, then peek at the Launch date. Hmmm, 2016? 24GB VRAM? $170? Acquired ✔️

Power

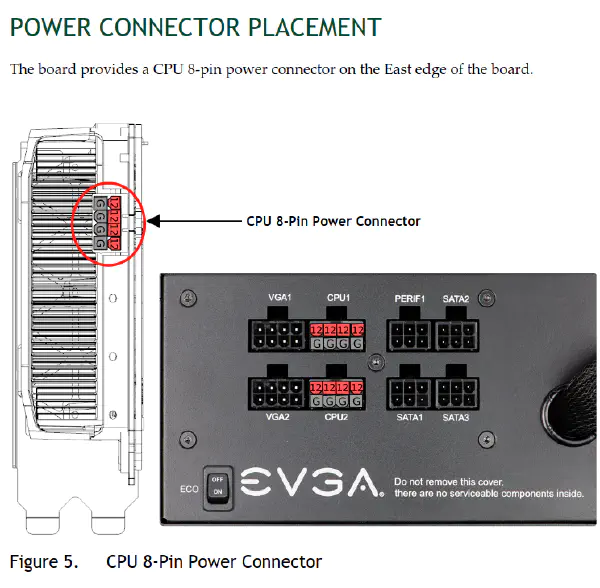

The first thing to know about this little piece of hardware is that it is built for data centers and the server rack form factor. I learned this lesson the hard way.

I wasn’t the only one to figure this out:

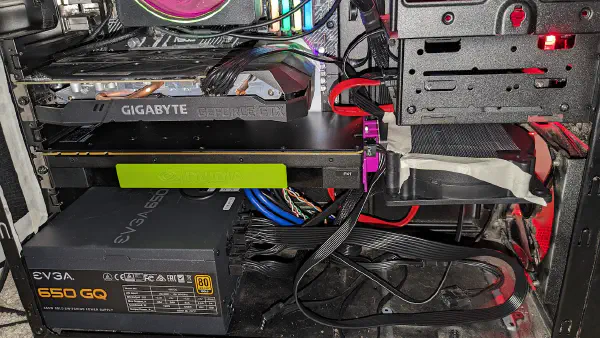

At least I had that warning before making a different mistake. The P40, which uses a PCIe slot, confusingly uses a CPU power connector, a byproduct of the data center-centric design. My EVGA 650G5 power supply only had one CPU connector available, but my partner’s EVGA 650GQ had two. Perfect! I swapped the power supplies, hit the switch, and let the magic smoke out of my two SATA hard drives. Oops. The power cables used, although keyed, are definitely NOT standard, even within the brand! After replacing all the cables with ones marked GQ, the smoke stayed inside all the parts. All my data is backed up, so I only lost the cost of new hard drives and a short panic attack.

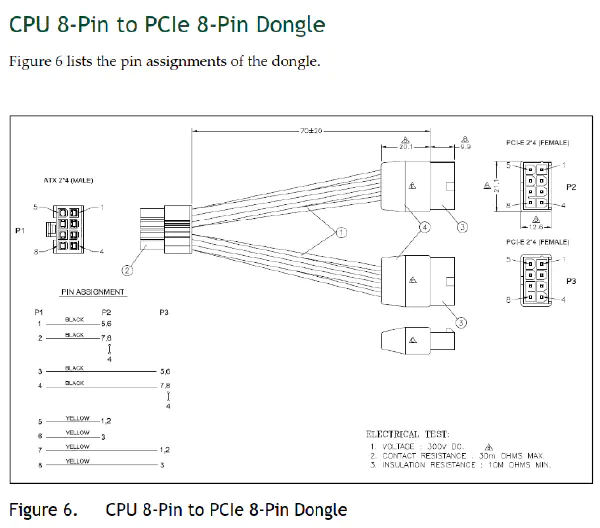

The P40 documentation predicts that some folks may want to use this with a not-so-perfect power supply, so they offer a nifty cable to convert two PCIE plugs to a single CPU plug.

Given my troubles so far, I was not messing with sketchy cables from eBay as a solution. I reverse-engineered that pins 5-8 were 12V, and 1-4 were GND. I had a CPU EPS (4+4) cable that keyed both my power supply and the GPU. Double-checking with a multimeter ensured no shorted connections. I snipped the tab on the 4+4 side of the cable so it fit in the P40 slot. My pinouts below:

Bonus Fans Needed

Staying true to the theme of “this belongs in a server,” you’ll need a fan dedicated to this GPU. This blower fan will ensure that your GPU stays cool and doubles as a leafblower if necessary. Make sure you get the 4-pin version so you can control the speed.

I 3D-printed a connector and taped a screen on top. The entire length of the GPU and fan barely fits in my case.

Linux Fan Control

When setting fan curves in BIOS, I was always confused about why it was tied to utilization, not temperature. Shouldn’t you spin the fan faster if it gets too hot? In any case, the fan needs a socket on the motherboard and a way to know how fast to spin. If you plug it into a Molex connector, it may spin at full speed and be in danger of certification by the FAA. If you choose to forego the fan, it will slowly heat up over the course of about 10 minutes to ~95C, where it will eventually shut down.

I wrote a systemctl service that depends on lm-sensors to control the fan speed. I used this StackExchange post to configure pwm and find which pwm corresponds to my blower fan. It would be best to edit the script below to use the appropriate hwmon and pwm values for your setup.

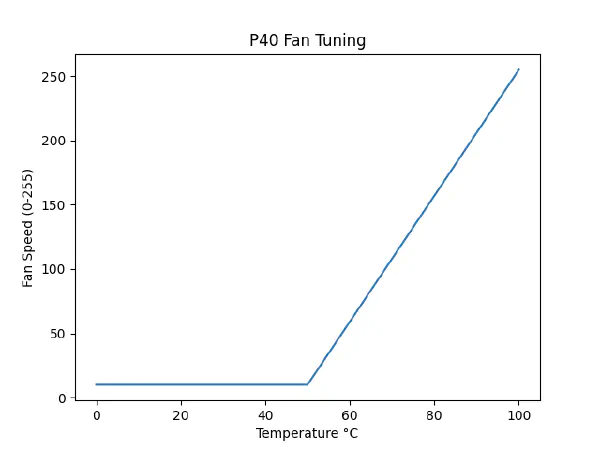

These scripts follow the following basic fan curve. It ensures the fan is on a bit when there is no actual processing. The GPU idles at around 38C. When a model is loaded, it will heat to about ~55C, and when processing ~65C.

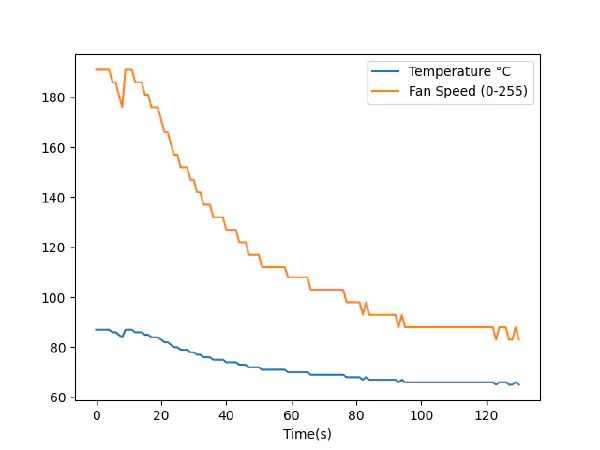

This test was conducted by running inference and enabling the fan control service when the GPU temperature was higher than I was comfortable with. The fan is not even close to saturating, and the response time is very reasonable. Once a steady state is reached, the fan volume is barely audible. My inner controls nerd wants to implement a PID controller, but I won’t let him.

Windows Fan Control

Testing

The state-of-the-art interface for inference is llama.cpp. Other tools are available, like ollama, but I have less experience there. Here’s a test with a practical request using the Deepseek Coder 33B model.

PROMPT> architect a blogging site by dogs, for dogs. dog users must have profile pictures and their reputation is tracked by bones

RESPONSE> Site Name: BarkBone Blog (a work in progress)

Design/Structure:

- Homepage with featured blog posts, trending topics, and most active members of the site.

- A page dedicated to dog news, updates, or interesting articles written by dog enthusiasts.

- A section for users to create an account and profile where they can upload a picture of themselves as well as information about their dogs (age, breed, hobbies, etc.).

- User profiles will have a tracker for bones given out and received, serving as a reputation system.

- Blog page where users can browse through the blog posts. Each post would have a title, picture, short summary of content, date posted, author's profile picture, and author name.

- A page to create a new post with options for including text, photos, videos etc.

- A comment section below each post to enable dog owners to interact or ask questions.

- An about page that gives information about the site's purpose and its features.

Functionality:

- Users can register, log in and manage their profiles which include a profile picture, a short bio, the dogs they have written about, etc.

- They will be able to create blog posts on a variety of topics related to dogs and their respective breed details.

- Users can give bones to other users' posts or comments that helped them or the post itself. This action would increase the bone counter in the author's profile.

- The site will have a search function to find specific blog posts based on tags, authors, date, etc.

- The site will also include sorting options for blog posts (latest, oldest, highest bones received) and profiles(highest bones received, lowest bones given).

Technologies:

- Backend: Node.js with Express.js framework to build the server-side logic and MongoDB for database storage.

- Frontend: HTML/CSS with React.js for interactive UI components.

- Hosting: Heroku or similar platform for easy deployment and scalability.

- Image Storage: AWS S3 or Google Cloud Storage for safe, efficient image uploads and serving.

- Authentication: JWT for user authentication and security.

- API Testing: Postman for testing backend APIs.

- DevOps: GitHub Actions (CI/CD) for automated deployment.

Statistics

llama_print_timings: load time = 7556.22 ms

llama_print_timings: sample time = 400.30 ms / 1513 runs ( 0.26 ms per token, 3779.68 tokens per second)

llama_print_timings: prompt eval time = 1975.48 ms / 41 tokens ( 48.18 ms per token, 20.75 tokens per second)

llama_print_timings: eval time = 169084.44 ms / 1514 runs ( 111.68 ms per token, 8.95 tokens per second)

llama_print_timings: total time = 206937.81 ms / 1555 tokens

why?

ChatGPT is free! Absolutely use it because it is excellent and it works. I want to run a local model because I’m interested in finding differences between models and integrating code I’ve written. I want to incorporate LLMs into my home automation system. I use Tabby with Neovim as a “copilot”. Tabby is pretty cool because of its retrieval augmented generation, it preprocesses your codebase into symbols which are included in each prompt. See my Neovim configuration in my dotfiles.

I’m unsure how OpenAI, Microsoft, Google, or Facebook will change. Will they decide to train on my data? Will my questions contribute to a system operating with extreme bias? It’s great that these corporations operate with ‘safety’ in mind, but ‘safety’ exists as long as it aligns with their strategy.